Public confidence in science has remained high and stable for years. But recent decades have seen incidents of scientific fraud and misconduct, failure to replicate key findings, and growth in the number of retractions – all of which may affect trust in science.

In an article published this week in PNAS, a group of leaders in science research, scholarship, and communication propose that to sustain a high level of trust in science, scientists must more effectively signal to each other and to the public that their work respects the norms of science.

The authors offer a variety of ways in which researchers and journals can communicate this – among them, greater transparency regarding data and methods; ways to certify the integrity of evidence; the disclosure of competing or relevant interests by authors; and wider adoption of tools such as checklists and badges to signal that studies have met accepted scientific standards.

“This absence [of clear signals] is problematic,” write the researchers, including Kathleen Hall Jamieson, professor of communication at the Annenberg School for Communication at the University of Pennsylvania and director of its Annenberg Public Policy Center (APPC). “Without clear signals, other scientists have difficulties ascertaining confidence in the work, and the press, policy makers, and the public at large may base trust decisions on inappropriate grounds, such as deeply held and irrational biases, nonscientific beliefs, and misdirection by conflicted stakeholders or malicious actors.”

In addition to Jamieson, the authors of “Signaling the trustworthiness of science,” published in the Proceedings of the National Academy of Sciences of the United States of America, are Marcia McNutt, president of the National Academy of Sciences; Veronique Kiermer, executive editor, Public Library of Science (PLOS); and Richard Sever, assistant director, Cold Spring Harbor Laboratory, and co-founder, bioRxiv. The order of authorship was determined by a coin toss.

The American public and trust in science

The authors write that “science is trustworthy in part because it honors its norms.” These norms include a reliance on statistics; having conclusions that are supported by data; disinterestedness, as seen through the disclosure of potential competing interests; validation by peer review; and ethical treatment of research subjects and animals.

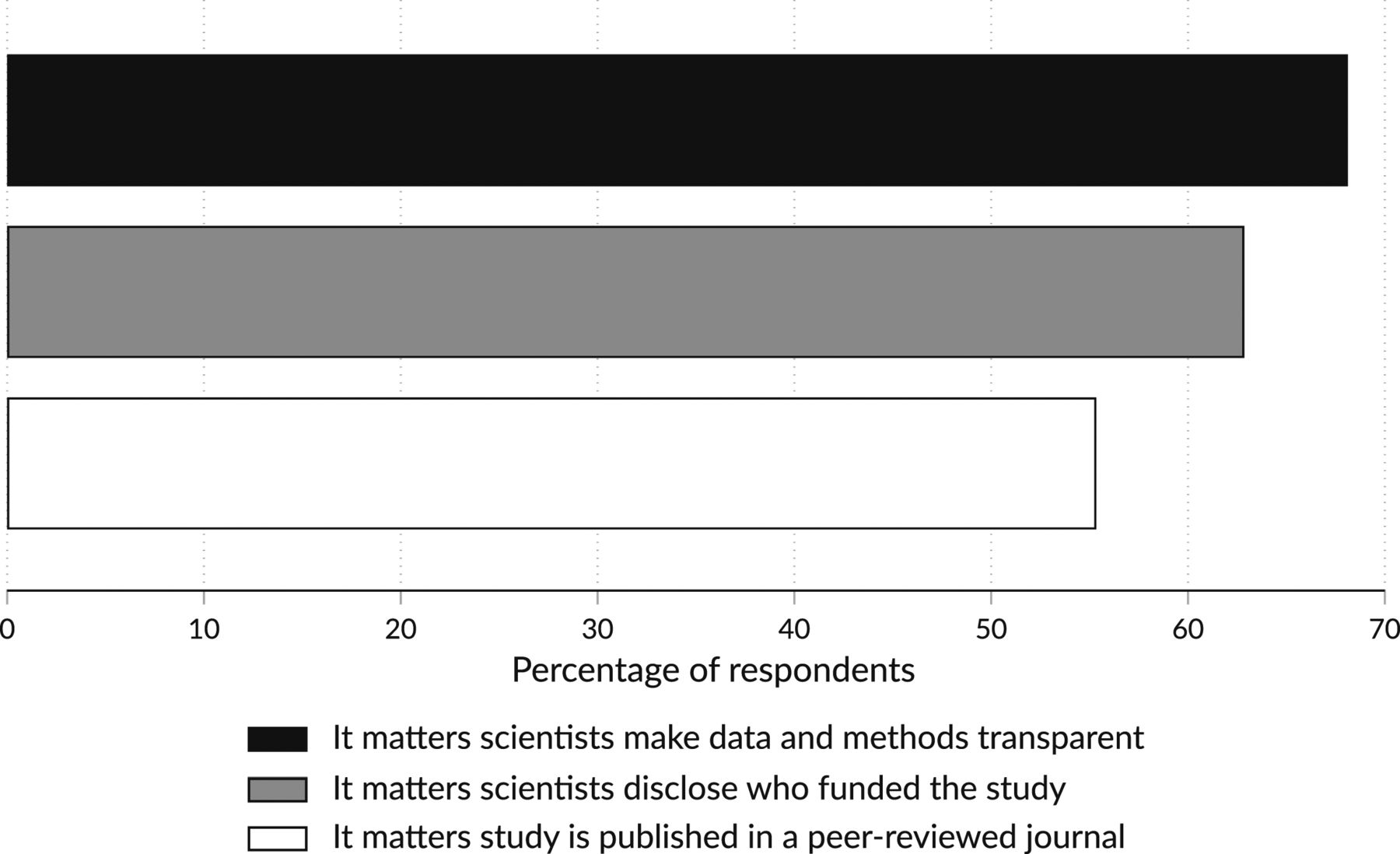

Adherence to these norms increases not just the reliability of scientific findings, but also the likelihood that the public will perceive science itself as reliable. The article cites a 2019 survey of 1,253 U.S. adults conducted by APPC, which found that in deciding whether to believe a scientific finding, 68% of those surveyed said it matters whether the scientists make their data and methods available and are completely transparent about their methods. In addition, 63% said it matters whether the scientists involved in the study disclose the individuals and organizations that funded their work, and 55% said it matters whether the study has been published in a peer-reviewed journal (Fig. 1). For further details, see the article.

Communicating norms

To support trust in science, the authors suggest that scientists need to communicate practices that reinforce the norms, among them:

- Have scientists explain the evidence and process by which they came to reconsider and change their views on a scientific issue;

- Have journals add links back and forward to retractions and expressions of editorial concern to more clearly indicate unreliability, and to studies that replicate findings to emphasize reliability;

- Adopt nuanced signaling language that more clearly explains the process now often called “retraction,” using terms such as “voluntary withdrawal” and “withdrawal for cause” or “editorial withdrawal.” Similarly, replace “conflict of interest” with a more neutral, broadened term such as “relevant interest.”

The authors also call for steps to show that individual studies adhere to these norms, such as:

- Have journals use a more refined and standardized series of checklists to show how they protect evidence-gathering and -reporting, including more detailed information about each author’s contributions to a manuscript;

- Adopt more widely badges such as those used by the Center for Open Science to show, for instance, whether scientists on a study have preregistered their hypothesis and met standards for open data and open materials (Fig. 2).

The recommendations were developed following an April 2018 conference, “Signals of Trust in Science Communication,” which was convened by Cold Spring Harbor Laboratory at the Banbury Center and organized by McNutt, Sever, and Jamieson.

“Science enjoys a relatively high level of public trust,” the authors write. “To sustain this valued commodity, in our increasingly polarized age, scientists and the custodians of science would do well to signal to other researchers and to the public and policy makers the ways in which they are safeguarding science’s norms and improving the practices that protect its integrity as a way of knowing.

“Embedding signals of trust in the reporting of individual studies can help researchers build their peers’ confidence in their work,” they continue. “Publishing platforms that rigorously apply these signals of trust can increase their standing as trustworthy vehicles. But beyond this peer-to-peer communication, the research community and its institutions also can signal to the public and policy makers that the scientific community itself actively protects the trustworthiness of its work.”
