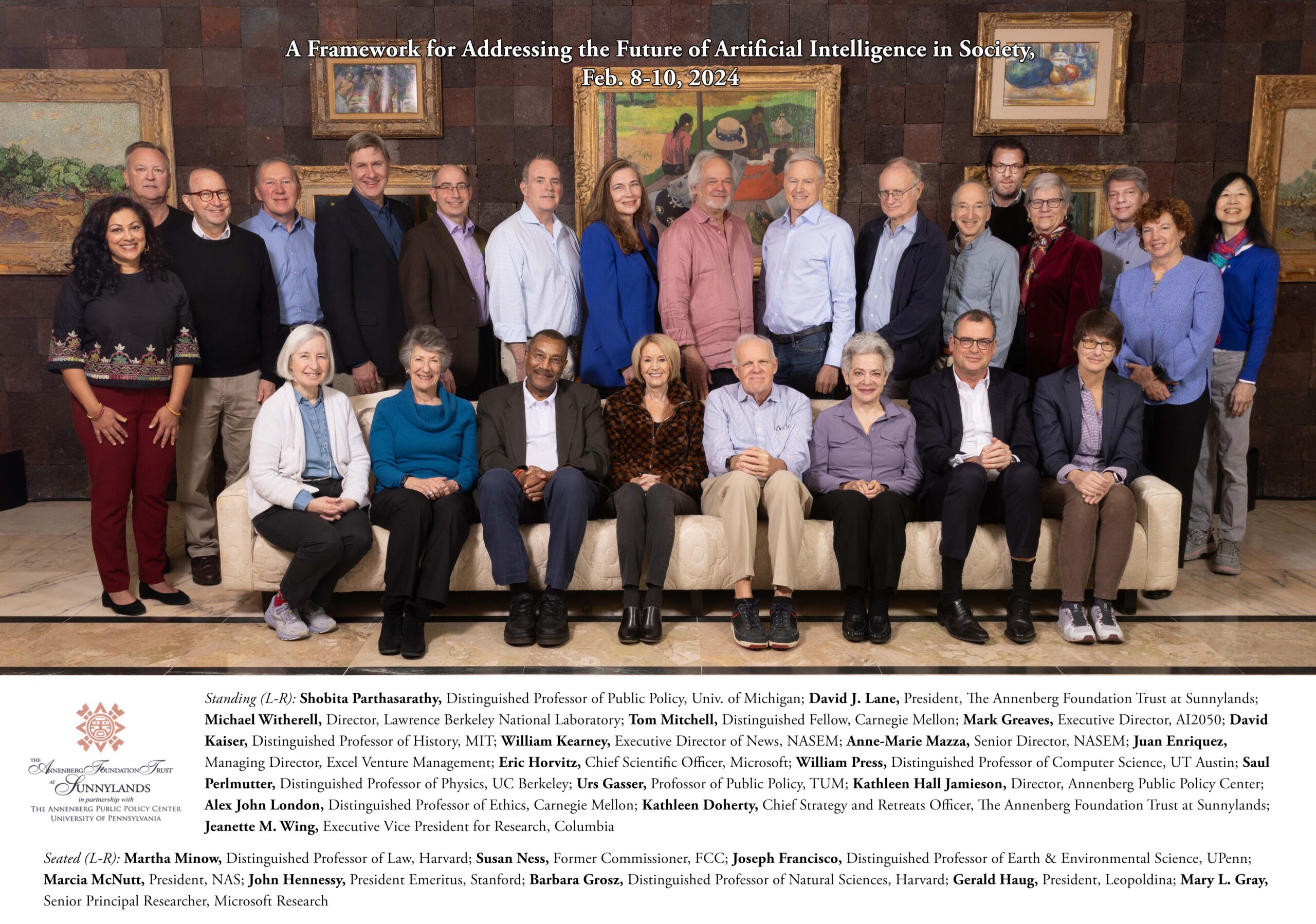

In an editorial titled “Protecting scientific integrity in an age of generative AI,” published today in the Proceedings of the National Academy of Sciences (PNAS), an interdisciplinary group of experts urges the scientific community to follow five principles of human accountability and responsibility when using artificial intelligence in research. The editorial is the product of a two-stage retreat convened by National Academy of Sciences (NAS) President Marcia McNutt and Annenberg Public Policy Center (APPC) Director and Sunnylands Program Director Kathleen Hall Jamieson. The first stage was hosted virtually by APPC on November 29-30, 2023, and the second was held at Sunnylands in Rancho Mirage, Calif., on February 9-12, 2024.

The retreats, convened by APPC, NAS, and the Annenberg Foundation Trust at Sunnylands, aimed to explore emerging challenges posed by the use of AI in research and to chart a path forward for the scientific community. The retreatants’ deliberations were informed by presentations from Nobel laureates Harold Varmus and David Baltimore on the scientific community’s efforts to address the challenges posed by potential pandemic pathogens and by emerging technologies such as gene editing.

Since 2015, these partner organizations have convened more than a dozen retreats. At these convenings, leaders in science, academia, business, medical ethics, the judiciary and the bar, government, and scientific publishing have explored ways to strengthen the integrity of science; to articulate the ethical principles that should guide scientific practice so that research at the frontiers of human knowledge is conducted ethically; and to protect the courts from inadvertent as well as deliberate misstatements about scientific knowledge.

The AI retreat discussions were informed by papers, commissioned by APPC and published in digest form in Issues in Science and Technology, that explored various aspects of AI, including its use and governance. The papers will form the core of a forthcoming publication by the University of Pennsylvania Press.

“The model used for the AI retreats was drawn from the one we successfully used for the Academies’ consideration of emerging human genome editing technologies,” said Jamieson. “NAS-Sunnylands-APPC 2018 and 2021 convenings paved the way for the international summits on human genome editing in Hong Kong in November 2018 and in London in March 2023.”

In the May 2024 editorial in PNAS, the authors emphasize that advances in generative AI represent a transformative moment for science, one that will accelerate scientific discovery but also challenge core norms and values of science such as accountability, transparency, replicability, and human responsibility. The authors also call for the establishment of a Strategic Council on the Responsible Use of AI in Science to provide ongoing guidance and oversight on responsibilities and best practices as the technology evolves.

The group of 24 retreatants included experts in behavioral and social sciences, ethics, biology, physics, chemistry, mathematics, and computer science, as well as leaders in higher education, law, governance, and science publishing and communication. Among them (pictured below) were Joseph Francisco, Distinguished Professor of Earth and Environmental Science at Penn, and APPC Director Jamieson.

“We welcome the advances that AI is driving across scientific disciplines, but we also need to be vigilant about upholding long-held scientific norms and values,” said National Academy of Sciences President Marcia McNutt, one of the co-authors of the editorial. “We hope our paper will prompt reflection among researchers and set the stage for concerted efforts to protect the integrity of science as generative AI increasingly is used in the course of research.”

Five Recommended Principles When Using AI in Research

In the editorial, the authors urge the scientific community to adhere to five principles when conducting research that uses AI:

- Transparent disclosure and attribution;

- Verification of AI-generated content and analyses;

- Documentation of AI-generated data;

- A focus on ethics and equity; and

- Continuous monitoring, oversight, and public engagement.

For each principle, the authors identify specific actions to be taken by scientists, by those who create AI models, and by others. For researchers, for example, transparent disclosure and attribution includes steps such as clearly disclosing the use of generative AI in research (including the specific tools, algorithms, and settings employed) and accurately attributing the human and AI sources of information or ideas. For model creators and refiners, it means actions such as providing publicly accessible details about their models, including the data used to train or refine them.

The authors recommend that the National Academies of Sciences, Engineering, and Medicine establish the proposed strategic council, which should coordinate with the scientific community and provide regularly updated guidance on the appropriate uses of AI. The council should study, monitor, and address the evolving use of AI in science; new ethical and societal concerns, including equity; and emerging threats to scientific norms. It should also share its insights across disciplines and develop and refine best practices.