New analyses from the Annenberg Public Policy Center find that public perceptions of scientists’ credibility – measured as their competence, trustworthiness, and the extent to which they are perceived to share an individual’s values – remain high, but their perceived competence and trustworthiness eroded somewhat between 2023 and 2024. The research also found that public perceptions of scientists working in artificial intelligence (AI) differ from those of scientists as a whole.

From 2018 to 2022, the Annenberg Public Policy Center (APPC) of the University of Pennsylvania relied on national cross-sectional surveys to monitor perceptions of science and scientists. In 2023-24, APPC moved to a nationally representative empaneled sample to make it possible to observe changes in individual perceptions.

The February 2024 findings, released today to coincide with the address by National Academy of Sciences President Marcia McNutt on “The State of the Science,” come from an analysis of responses from an empaneled national probability sample of U.S. adults surveyed in February 2023 (n=1,638 respondents), November 2023 (n=1,538), and February 2024 (n=1,555).

Drawing on the 2022 cross-sectional data, in an article titled “Factors Assessing Science’s Self-Presentation model and their effect on conservatives’ and liberals’ support for funding science,” published in Proceedings of the National Academy of Sciences (September 2023), Annenberg-affiliated researchers Yotam Ophir (State University of New York at Buffalo and an APPC distinguished research fellow), Dror Walter (Georgia State University and an APPC distinguished research fellow), and Patrick E. Jamieson and Kathleen Hall Jamieson of the Annenberg Public Policy Center isolated factors that underlie perceptions of scientists (Factors Assessing Science’s Self-Presentation, or FASS). These factors predict public support for increased funding of science and support for federal funding of basic research.

The five factors in FASS are whether science and scientists are perceived to be credible, to be prudent, to overcome bias, to correct error (self-correcting), and to produce work that benefits people like the respondent and the country as a whole (beneficial). In a 2024 publication titled “The Politicization of Climate Science: Media Consumption, Perceptions of Science and Scientists, and Support for Policy” (May 26, 2024) in the Journal of Health Communication, the same team showed that these five factors mediate the relationship between exposure to media sources such as Fox News and outcomes such as belief in anthropogenic climate change, perception of the threat it poses, and support for climate-friendly policies such as a carbon tax.

Speaking about the FASS model, Kathleen Hall Jamieson, director of the Annenberg Public Policy Center and director of the survey, said that “because our 13 core questions reliably reduce to five factors with significant predictive power, the ASK survey’s core questions make it possible to isolate both stability and changes in public perception of science and scientists across time.” (See the appendix for the list of questions.)

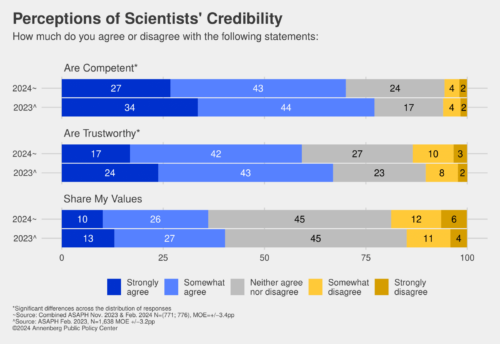

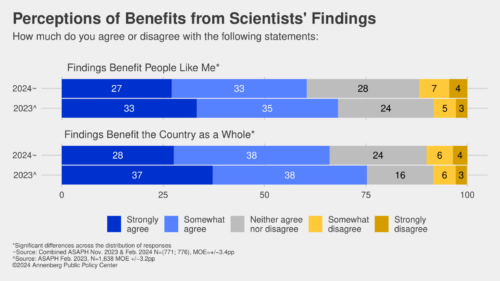

The new research finds that while scientists are held in high regard, two of the three dimensions that make up credibility – perceptions of competence and trustworthiness – showed a small but statistically significant drop from 2023 to 2024, as did both measures of the beneficial factor. The 2024 survey data also indicate that the public considers AI scientists less credible than scientists in general, with notably fewer people saying that AI scientists are competent, are trustworthy, and “share my values” than say the same of scientists generally.

“Although confidence in science remains high overall, the survey reveals concerns about AI science,” Jamieson said. “The finding is unsurprising. Generative AI is an emerging area of science filled with both great promise and great potential peril.”

The data are based on Annenberg Science Knowledge (ASK) waves of the Annenberg Science and Public Health (ASAPH) surveys conducted in 2023 and 2024. The findings labeled 2023 are based on a February 2023 survey, and the findings labeled 2024 are based on combined ASAPH survey half-samples surveyed in November 2023 and February 2024.

For further details, download the toplines and a series of figures that accompany this summary.

Perceptions of scientists overall

In the FASS model, perceptions of scientists’ credibility are assessed through perceptions of whether scientists are competent, trustworthy, and “share my values.” The first two of those values slipped in the most recent survey. In 2024, 70% of those surveyed strongly or somewhat agree that scientists are competent (down from 77% in 2023) and 59% strongly or somewhat agree that scientists are trustworthy (down from 67% in 2023). (See figure 1, and figs. 2-4 for other findings.)

The survey also found that in 2024, fewer people felt that scientists’ findings benefit “the country as a whole” and “benefit people like me.” In 2024, 66% strongly or somewhat agreed that findings benefit the country as a whole (down from 75% in 2023). Belief that scientists’ findings “benefit people like me” also declined, to 60% from 68%. Taken together, those two questions make up the beneficial factor of FASS. (See fig. 5.)

The findings follow sustained attacks on climate and Covid-19-related science and, more recently, public concerns about the rapid development and deployment of artificial intelligence.

Comparing perceptions of scientists in general with climate and AI scientists

Credibility: Asked about the three measures underlying scientists’ credibility, respondents rate AI scientists lower than scientists in general on all three. (See fig. 6.)

- Competent: 70% strongly/somewhat agree that scientists are competent, but only 62% for climate scientists and 49% for AI scientists.

- Trustworthy: 59% agree scientists are trustworthy, 54% agree for climate scientists, 28% for AI scientists.

- Share my values: More respondents agree that climate scientists “share my values” (38%) than agree for scientists in general (36%) or AI scientists (15%). More people disagree with the statement for AI scientists (35%) than for the others.

Prudence: Asked whether they agree or disagree that science by various groups of scientists “creates unintended consequences and replaces older problems with new ones,” over half of those surveyed (59%) agree that AI scientists create unintended consequences and just 9% disagree. (See fig. 7.)

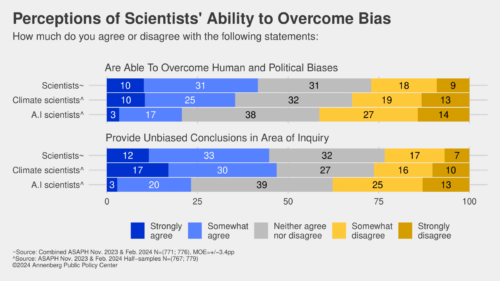

Overcoming bias: Just 42% of those surveyed agree that scientists “are able to overcome human and political biases,” and only 21% feel that way about AI scientists. In fact, 41% disagree that AI scientists are able to overcome human and political biases. On a related measure, just 23% agree that AI scientists provide unbiased conclusions in their area of inquiry and 38% disagree. (See fig. 8.)

Self-correction: Self-correction, or “organized skepticism expressed in expectations sustaining a culture of critique,” as the FASS paper puts it, is considered by some to be a “hallmark of science.” AI scientists are seen as less likely than scientists or climate scientists to take action to prevent fraud, to take responsibility for mistakes, or to have their mistakes caught by peer review. (See fig. 9.)

Benefits: Asked about the benefits from scientists’ findings, 60% agree that scientists’ “findings benefit people like me,” though just 44% agree for climate scientists and 35% for AI scientists. Asked about whether findings benefit the country as a whole, 66% agree for scientists, 50% for climate scientists and 41% for AI scientists. (See fig. 10.)

Your best interest: The survey also asked respondents how much trust they have in scientists to act in the best interest of “people like you.” (This specific trust measure is not a part of the FASS battery.) Respondents have less trust in AI scientists than in others: 41% have a great deal/a lot of trust in medical scientists; 39% in climate scientists; 36% in scientists; and 12% in AI scientists. (See fig. 11.)

The data from ASK surveys have been used to date in four peer-reviewed papers:

- Using 2019 ASK data: Jamieson, K. H., McNutt, M., Kiermer, V., & Sever, R. (2019). Signaling the trustworthiness of science. Proceedings of the National Academy of Sciences, 116(39), 19231-19236.

- Using 2022 ASK data: Ophir, Y., Walter, D., Jamieson, P. E., & Jamieson, K. H. (2023). Factors Assessing Science’s Self-Presentation model and their effect on conservatives’ and liberals’ support for funding science. Proceedings of the National Academy of Sciences, 120(38), e2213838120.

- Using 2024 ASK data: Lupia, A., Allison, D. B., Jamieson, K. H., Heimberg, J., Skipper, M., & Wolf, S. M. (2024). Trends in US public confidence in science and opportunities for progress. Proceedings of the National Academy of Sciences, 121(11), e2319488121.

- Using Nov 2023 and Feb 2024 ASK data: Ophir, Y., Walter, D., Jamieson, P. E., & Jamieson, K. H. (2024). The politicization of climate science: Media consumption, perceptions of science and scientists, and support for policy. Journal of Health Communication, 29(sup1), 18-27.

APPC’s ASAPH survey

The survey data come from the 17th and 18th waves of a nationally representative panel of U.S. adults, first empaneled in April 2021, conducted for the Annenberg Public Policy Center by SSRS, an independent market research company. These waves of the Annenberg Science and Public Health (ASAPH) knowledge survey were fielded February 22-28, 2023, November 14-20, 2023, and February 6-12, 2024, and have margins of sampling error (MOE) of ± 3.2, 3.3 and 3.4 percentage points, respectively, at the 95% confidence level. In November 2023, half of the sample was asked about “scientists” and the other half about “climate scientists.” In February 2024, those initially asked about “scientists” were asked about “scientists studying AI” and the other half were asked about “scientists.” This provided two half samples addressing specific areas of study, while all panelists were asked about “scientists” generally. All figures are rounded to the nearest whole number and may not add to 100%. Combined subcategories may not add to totals in the topline and text due to rounding.
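For readers who want to see how margins of sampling error of this size relate to the reported sample sizes, the following is a minimal sketch, not the method used by SSRS or APPC. It computes the textbook margin for a simple random sample at the 95% confidence level (using a proportion of 50%) and then the design effect that the reported margin would imply. The pairing of each sample size with each margin assumes both lists follow the same wave order, and the function names `srs_moe_points` and `implied_design_effect` are hypothetical. The actual weighting and design-effect calculations are described in the methodology statements, not here.

```python
import math

Z_95 = 1.96  # normal critical value for a 95% confidence level


def srs_moe_points(n, p=0.5, z=Z_95):
    """Margin of error, in percentage points, for a simple random sample of size n."""
    return 100 * z * math.sqrt(p * (1 - p) / n)


def implied_design_effect(reported_moe_points, n):
    """Design effect implied by a reported MOE relative to the simple-random-sample benchmark.

    This is an inference from published numbers, not a figure taken from the survey documentation.
    """
    return (reported_moe_points / srs_moe_points(n)) ** 2


# (wave label, respondents, reported MOE in points); pairing by wave order is an assumption.
waves = [
    ("February 2023", 1638, 3.2),
    ("November 2023", 1538, 3.3),
    ("February 2024", 1555, 3.4),
]

for label, n, reported in waves:
    print(
        f"{label}: n={n}, simple-random-sample MOE = {srs_moe_points(n):.1f} pts, "
        f"reported = {reported} pts, implied design effect = {implied_design_effect(reported, n):.2f}"
    )
```

Under these assumptions, the simple-random-sample margins come out to roughly 2.4 to 2.5 points, smaller than the reported values. A gap in that direction is what one would expect when a margin accounts for weighting in a probability panel.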

Download the toplines, figures, and methodology statements.

The policy center has been tracking the American public’s knowledge, beliefs, and behaviors regarding vaccination, Covid-19, flu, maternal health, climate change, and other consequential health issues through this survey panel for over three years. In addition to Jamieson, the APPC team includes Shawn Patterson Jr., who analyzed the data; Patrick E. Jamieson, director of the Annenberg Health and Risk Communication Institute, who developed the questions; and Ken Winneg, managing director of survey research, who supervised the fielding of the survey.