Misinformation is proliferating across the globe, but research into its nature and origins is often disconnected from the tools and techniques used to combat it.

Now, a team of nonprofit, academic, industry, and fact-checking organizations has received a 12-month, $750,000 grant from the National Science Foundation’s Convergence Accelerator to create a new platform to narrow the gap between research into misinformation and responses designed to curb it. The project will initially focus on addressing misinformation in partnership with Asian American and Pacific Islander (AAPI) communities.

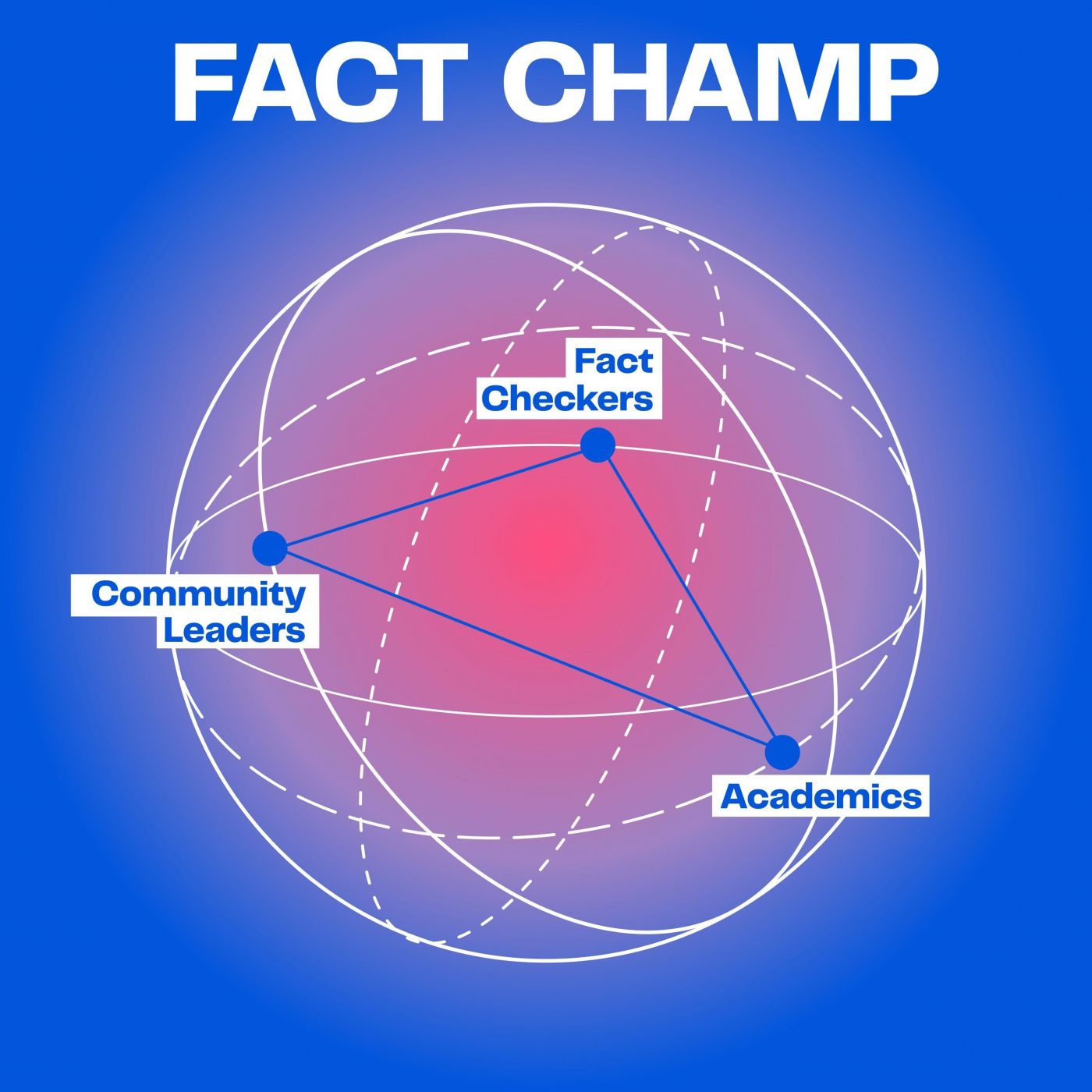

The Annenberg Public Policy Center (APPC) and its FactCheck.org project are among the participants in the collaboration, called FACT CHAMP. Other partners are Meedan, a technology nonprofit focused on fact-checking tools; the University of Massachusetts Amherst, the University of Connecticut, and Rutgers University; and AuCoDe (Automated Controversy Detection), a start-up that uses artificial intelligence to detect and analyze disinformation.

FACT CHAMP stands for Fact-checker, Academic, and Community Collaboration Tools: Combating Hate, Abuse, and Misinformation with Minority-led Partnerships. The project is designed to advance scientific understanding of how trust, misinformation, abuse, and hateful content affect underrepresented groups. The project is one of the 2021 cohort of Phase I projects on Trust & Authenticity in Communications Systems (Track F) within the NSF Convergence Accelerator.

“Problems faced day-to-day by fact-checkers are inspiring new research challenges and can open up new datasets for research,” said Scott Hale, the project’s principal investigator and Meedan’s director of research. “At the same time, developments in computer science and social science can create new tools, approaches, and understandings to better counter misinformation in practice.”

Co-PI Kathleen Hall Jamieson, director of the Annenberg Public Policy Center and a cofounder of FactCheck.org, said, “Identifying the misinformation and patterns of deception to which different societal groups are exposed is a prerequisite of effective fact-checking.” Added Jamieson, “The Annenberg Public Policy Center is proud to be part of the sort of cross-disciplinary, cross-institution, cross-sector partnership needed to address this complex, consequential challenge.”

The first phase of this project, conducted over the next year, will involve working closely with leaders in AAPI communities to prototype and design a platform using tip lines, claim-matching, and state-of-the-art controversy detection models to help identify and triage potential misinformation online within and about their communities.

Navigating diverse threats

“This project feels urgent, as it is really an intervention that engages with the tragedies that have spurred the #StopAAPIHate movement,” said Jonathan Corpus Ong, a co-PI and associate professor in global digital media at UMass Amherst. “It’s essential we conduct more multidisciplinary research into how AAPI community members navigate the diverse threats of the contemporary digital environment, from racist conspiracy theories to an extremist right-wing ideology, that prey on the AAPI community’s current state of fear and anxiety.”

Subsequent work in this project will include development of smartphone-based self-help resources for AAPI community leaders and building an infrastructure to securely share data and challenges with researchers who are investigating and addressing the ways misinformation propagates. The infrastructure will also allow academic solutions to be more easily used in practice.

Kiran Garimella, a co-PI and assistant professor at Rutgers University, said, “Working with fact-checking organizations and communities is an exciting opportunity but entails significant coordination costs. I am confident our team can reduce these burdens, making such collaborations easier and paving the way for amazing interdisciplinary projects in this space.”

To ensure the project’s success, the multidisciplinary team will conduct three proof-of-concept activities over the next nine months to determine the best approaches for enabling meaningful collaboration among researchers, fact-checkers, and community leaders to combat hate, abuse, and misinformation. Through these activities, the team will advance research on detection of controversial and hateful content, improve its understanding of hate speech and misinformation, and develop new tools and adapt existing ones to create collaboration infrastructure aligned with the needs of researchers, practitioners, and communities. At the end of Phase I, the team will take part in a formal proposal and pitch evaluation to be considered for Phase II. If selected, the team may receive up to $5 million to further the solution toward real-world application.

To learn more about the project and partners, read the news release at Meedan.